Volumez DIaaS for AI/ML

The rapid adoption of artificial intelligence and machine learning systems across every industry has created an unprecedented scaling challenge as businesses turn data into knowledge and intelligence. Volumez’s Data Infrastructure as a Service (DIaaS) for AI/ML addresses this challenge: storage, compute, and networking resources are abstracted from their physical locations and intelligently orchestrated to optimize the cloud data infrastructure across the AI/ML data pipeline. Volumez makes AI/ML data services highly performant, scalable, and efficient across any cloud, keeping GPU utilization near 100%, reducing training times, increasing model accuracy, improving data scientist productivity with parallel jobs, and automating data infrastructure tasks in complex MLOps environments.

Volumez breaks through the AI/ML performance barrier with unprecedented results in the MLPerf® Storage 1.0 AI/ML Training Benchmark

We’re honored that Volumez has been named the Undisputed Leader in the first MLCommons® MLPerf® Storage Benchmark for AI/ML Training workloads. Volumez’s results demonstrate the superiority of its cloud-native data infrastructure.

Performance

Train larger models in less time with the Volumez DIaaS for AI/ML platform. Volumez delivers 1.1 TB/sec throughput, 92.2% GPU utilization, and 9.9M IOPS of guaranteed performance to each GPU server, enabling ML engineers and MLOps teams to deliver better, faster business insights.

Scalability

Efficiency

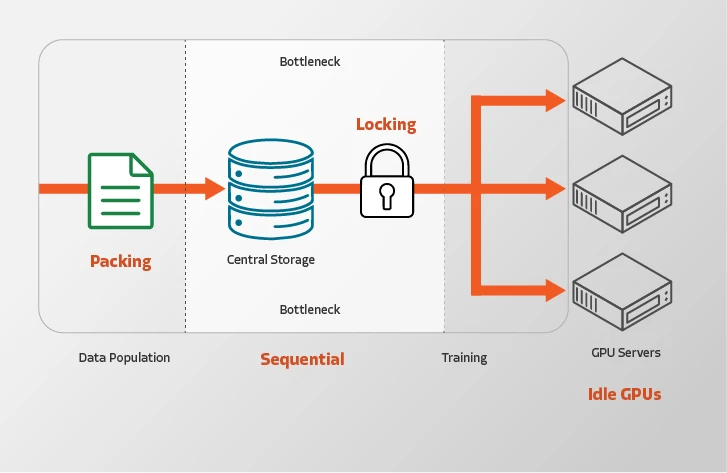

Today’s Problems

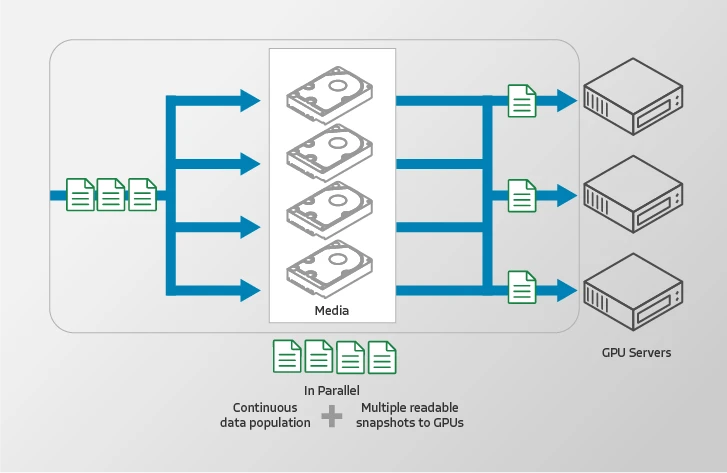

Volumez Solution